Uncover and quantify Facebook's use of sensitive personal data in advertising campaigns

Each of us, as a member of society, inevitably gets labeled: heavier people are labeled obese, and programmers are labeled as lovers of plaid shirts. For individuals, such preference tags are a convenient way to tell people apart; for Internet companies, user preference data is a resource for targeted advertising. Targeted advertising matches user preferences, generates profit for the company, and is convenient for users, but when the preference data used for business purposes is sensitive, it becomes an invasion of user privacy. In May 2018, the EU began enforcing the GDPR (General Data Protection Regulation), which covers sensitive personal information. In 2017, Facebook had already been fined more than a million euros by Spain's data protection authority for allegedly violating data protection rules. With only a year between that penalty and the enforcement of the GDPR, one cannot help joking that Facebook helped push EU privacy protection forward.

In the GDPR, sensitive personal data is divided into eight broad categories (health data, political opinions, sexual habits, religious beliefs, race, etc.). A group of researchers set out to expose and quantify Facebook's use of sensitive personal data in advertising campaigns [1]. Their work can be summed up in three parts: screening sensitive preferences, quantifying sensitive preferences, and statistically analyzing sensitive preferences.

First, you need to know how Facebook assigns personal preference tags to a user. Preference tags come from six sources:

1. Preferences that users add themselves, such as nationality, school, age, etc.;

2. Actions the user takes on Facebook that relate to certain preferences, such as blocking a topic or blocking other users;

3. Ads related to certain preferences that the user clicks on;

4. Apps related to certain preferences that the user downloads through Facebook;

5. Pages the user has liked that relate to certain preferences;

6. Comments, shares, likes, and other user activity related to certain preferences.

With Facebook's ad management system, targeting ads by user preference is simple. Advertisers set the regions, preferences, and other attributes of their target users and publish the campaign in the Facebook ad management system; once Facebook has delivered the ads according to the advertiser's requirements, it issues a bill for payment. When the preference used in the campaign is sensitive, the advertisement becomes a serious violation of the user's privacy. It is worth mentioning that one of the researchers behind the study received a gay dating ad pushed by Facebook and then discovered that Facebook had labeled him with a preference for homosexuality.

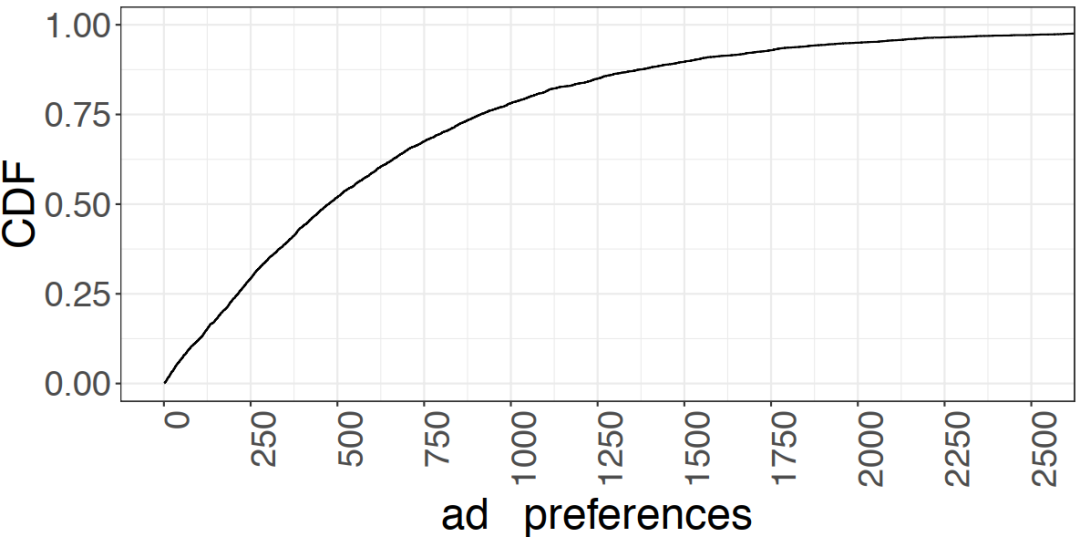

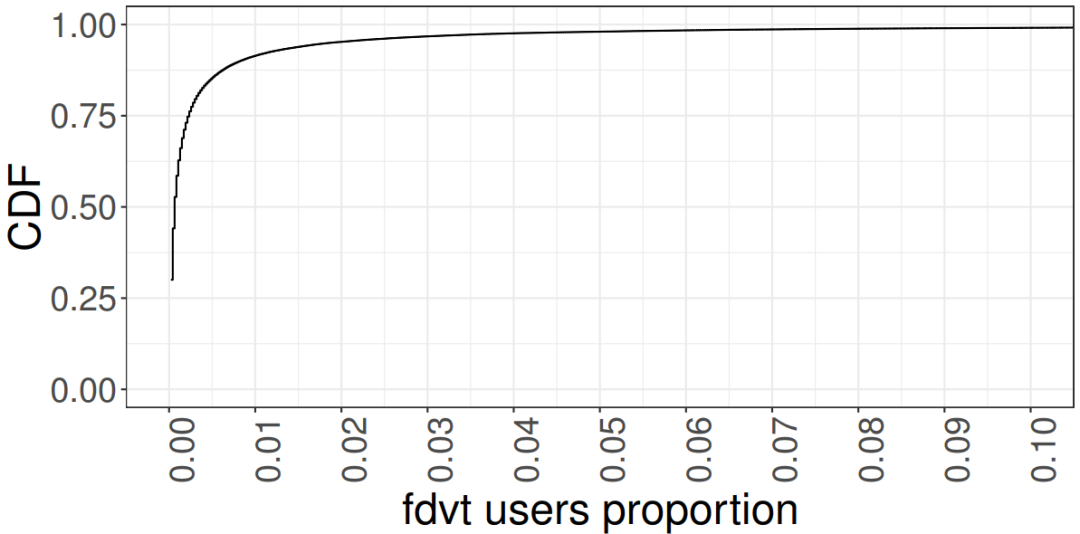

The researchers were clearly careful about how they collected their data. They developed their own browser plug-in, FDVT, which estimates the value that users, as content producers, generate for Facebook, and which collects user preference data with the user's permission. After three years of operation, it had accumulated about 4,577 Facebook users, 3,166 of them from EU member states. This user data contains approximately 5.5M preference tags, of which 126,192 are distinct. Each record consists of six parts, one of which is the number of users corresponding to a preference, and the researchers rank preferences by that number.

Figure 1 shows the researchers' basic analysis of the collected data: a CDF of the number of preference tags per user, and a CDF of the share of users that each preference tag is assigned to. In Figure 1(a), the median of the CDF (50%) corresponds to 474 ad preferences; in other words, 50% of FDVT users have more than 474 tags. In Figure 1(b), the median of the CDF (50%) corresponds to a user fraction of 0.0006. Multiplied by the total number of FDVT users, this gives about 3; that is, 50% of all preferences are assigned to at most 3 users. This means that a large part of a user's preferences are labels that Facebook has tailored to that user.
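The reading of Figure 1(b) can be checked with a line of arithmetic. The sketch below uses only the figures quoted above; the variable names are illustrative and not taken from the study.

```python
# Figures quoted in the text above.
total_fdvt_users = 4577        # FDVT users in the dataset
median_user_fraction = 0.0006  # CDF median in Figure 1(b), as a fraction of users

# Number of users that the median preference tag is assigned to.
median_users = median_user_fraction * total_fdvt_users

print(f"{median_users:.4f}")  # 2.7462
print(round(median_users))    # 3
```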

Next, to prove that Facebook uses sensitive user information for advertising, the researchers first showed that the preferences Facebook assigns to users include sensitive information. To do this, they took the 126K distinct ad preferences assigned to FDVT users and filtered them for sensitive preferences in two steps.

As a first step, the researchers used natural language processing (NLP) to perform a coarse-grained screening of the preferences. Starting from the eight sensitive categories in the GDPR, they collected a set of sensitive keywords from Wikipedia. They then used Datamuse, an API for finding similar words, to search for and compile 264 sensitive keywords. The next step is to compute semantic similarity, which takes two inputs: the 126K ad preferences from the FDVT dataset and the 264 keywords for the sensitive categories. Each ad preference is compared against all 264 keywords, and the highest similarity value across those comparisons is recorded. As a result, each of the 126K ad preferences is assigned a similarity score indicating how likely it is to be a sensitive ad preference. Semantic similarity ranges from 0 to 1, and the closer a preference's score is to 1, the higher the similarity. After examining the similarity scores of all ad preferences, the researchers set 0.6 as the threshold: if an ad preference has a similarity score greater than 0.6, it is treated as sensitive and passed on to the next step.
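The coarse-grained filter described above can be sketched as follows. This is a minimal illustration, not the researchers' implementation: the text does not say how semantic similarity was computed, so cosine similarity over word vectors is an assumption, and the vectors, keyword names, and preference names are made-up toy values. The toy vectors are non-negative, which keeps the score within the 0 to 1 range mentioned above.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def max_similarity(pref_vec, keyword_vecs):
    """Highest similarity between one preference and any sensitive keyword."""
    return max(cosine_similarity(pref_vec, kw) for kw in keyword_vecs.values())

def coarse_filter(pref_vecs, keyword_vecs, threshold=0.6):
    """Keep preferences whose best keyword match scores above the threshold."""
    scores = {name: max_similarity(vec, keyword_vecs) for name, vec in pref_vecs.items()}
    return {name: score for name, score in scores.items() if score > threshold}

# Hypothetical toy vectors standing in for real word embeddings.
keyword_vecs = {"religion": [1.0, 0.0, 0.0], "health": [0.0, 1.0, 0.0]}
pref_vecs = {
    "Buddhism": [0.9, 0.1, 0.0],
    "Diabetes awareness": [0.1, 0.8, 0.2],
    "Football": [0.1, 0.1, 0.9],
}

flagged = coarse_filter(pref_vecs, keyword_vecs)
print(sorted(flagged))  # ['Buddhism', 'Diabetes awareness']
```

In the study, this loop would run over 126K preferences and 264 keywords, so each preference's score is the maximum of 264 comparisons.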

The second step is a fine-grained screening by manual classification. The research team recruited 12 panelists, all researchers (faculty and doctoral students) with knowledge of the privacy field. Each panelist manually classified a random sample of between 1,000 and 4,452 preferences drawn from the 4,452 preferences that passed the coarse-grained filter. Panelists were asked to assign each ad preference to one of the 8 GDPR categories, or to mark it as non-sensitive if they considered it so. Using majority voting, the researchers ended up with 2,091 sensitive ad preferences.
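A majority vote of this kind can be sketched in a few lines. The exact voting rule is not given in the text, so the sketch assumes a preference counts as sensitive when a strict majority of its votes name some sensitive category, and it reports the most frequently chosen category; the label strings and sample votes are hypothetical.

```python
from collections import Counter

NON_SENSITIVE = "non-sensitive"  # hypothetical label for "not sensitive"

def classify(votes):
    """Return the winning sensitive category, or None if the preference is not sensitive.

    Assumed rule: sensitive only if a strict majority of votes pick a sensitive category.
    """
    sensitive_votes = [v for v in votes if v != NON_SENSITIVE]
    if len(sensitive_votes) <= len(votes) / 2:
        return None
    # Most frequently chosen sensitive category among the panelists.
    return Counter(sensitive_votes).most_common(1)[0][0]

def select_sensitive(votes_by_preference):
    """Map each sensitive preference to its majority category."""
    result = {}
    for pref, votes in votes_by_preference.items():
        label = classify(votes)
        if label is not None:
            result[pref] = label
    return result

# Toy votes from three hypothetical panelists.
votes_by_preference = {
    "Homosexuality": ["sexuality", "sexuality", NON_SENSITIVE],
    "Cooking": [NON_SENSITIVE, NON_SENSITIVE, "health"],
    "Islam": ["religion", "religion", "religion"],
}

print(select_sensitive(votes_by_preference))
# {'Homosexuality': 'sexuality', 'Islam': 'religion'}
```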

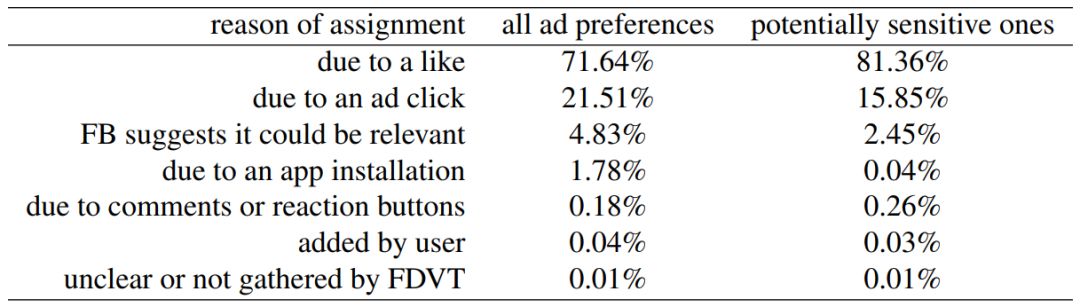

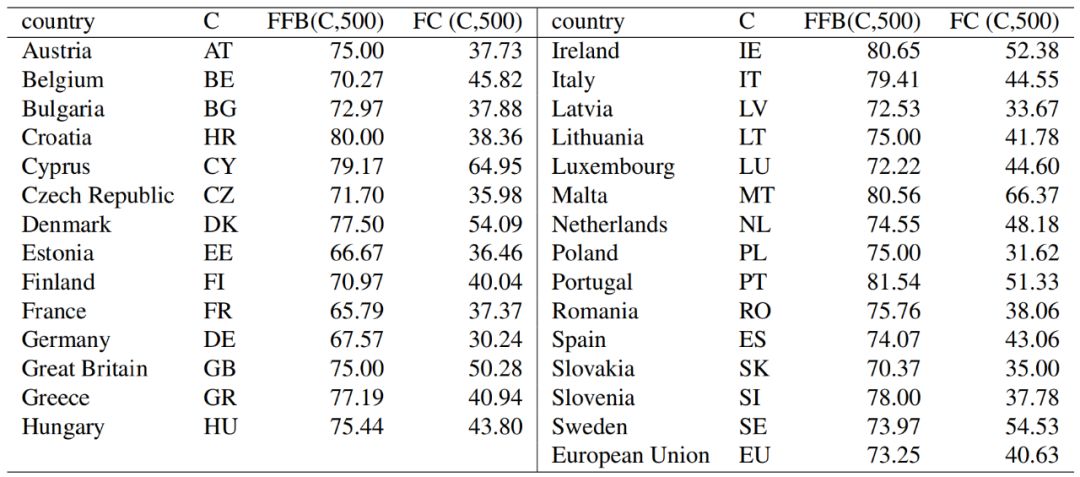

Table 1: Share of each of the six reasons for which Facebook assigns preferences to users

To analyze sensitive preferences statistically, the researchers proposed two metrics that quantify the extent to which Facebook exploits users' sensitive preferences. The first metric is FFB(C, N): in country C, the percentage of all Facebook users who have been assigned at least one of the top N sensitive preferences. The other is FC(C, N): in country C, the percentage of all citizens who have been assigned at least one of the top N sensitive preferences.

The researchers then counted the share of FDVT users' preferences assigned for each reason. The results in Table 1 show that most sensitive ad preferences come from Facebook's own analysis of the user (81%) or from the user clicking on an ad (16%). Only a very small share of preferences (0.03%) comes from users proactively adding potentially sensitive ad preferences to their list through the settings Facebook provides. This indicates that Facebook users have very little control over their ad preferences. Normally, attaching sensitive preferences to a user by analyzing that user's characteristics would require the user's permission, but there is virtually no such step in the way Facebook operates.
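The two metrics can be illustrated on toy data. The sketch below assumes each metric counts users holding at least one of the top N sensitive preferences and divides by either the number of Facebook users or the country's population; the user records, preference names, and population figure are invented for illustration.

```python
def users_with_any(user_prefs, top_n_prefs):
    """Count users assigned at least one of the given sensitive preferences."""
    top = set(top_n_prefs)
    return sum(1 for prefs in user_prefs.values() if top & set(prefs))

def ffb(user_prefs, top_n_prefs):
    """FFB(C, N): percentage of the country's Facebook users that are tagged."""
    return 100.0 * users_with_any(user_prefs, top_n_prefs) / len(user_prefs)

def fc(user_prefs, top_n_prefs, population):
    """FC(C, N): percentage of the country's citizens that are tagged."""
    return 100.0 * users_with_any(user_prefs, top_n_prefs) / population

# Hypothetical country with 4 Facebook users out of 8 citizens.
user_prefs = {
    "u1": ["Islam", "Football"],
    "u2": ["Cooking"],
    "u3": ["Homosexuality"],
    "u4": ["Islam", "Homosexuality"],
}
top_2 = ["Islam", "Homosexuality"]  # stand-in for the top N = 2 sensitive preferences

print(ffb(user_prefs, top_2))               # 75.0
print(fc(user_prefs, top_2, population=8))  # 37.5
```

FC is always at most FFB for the same country, because not every citizen is a Facebook user.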

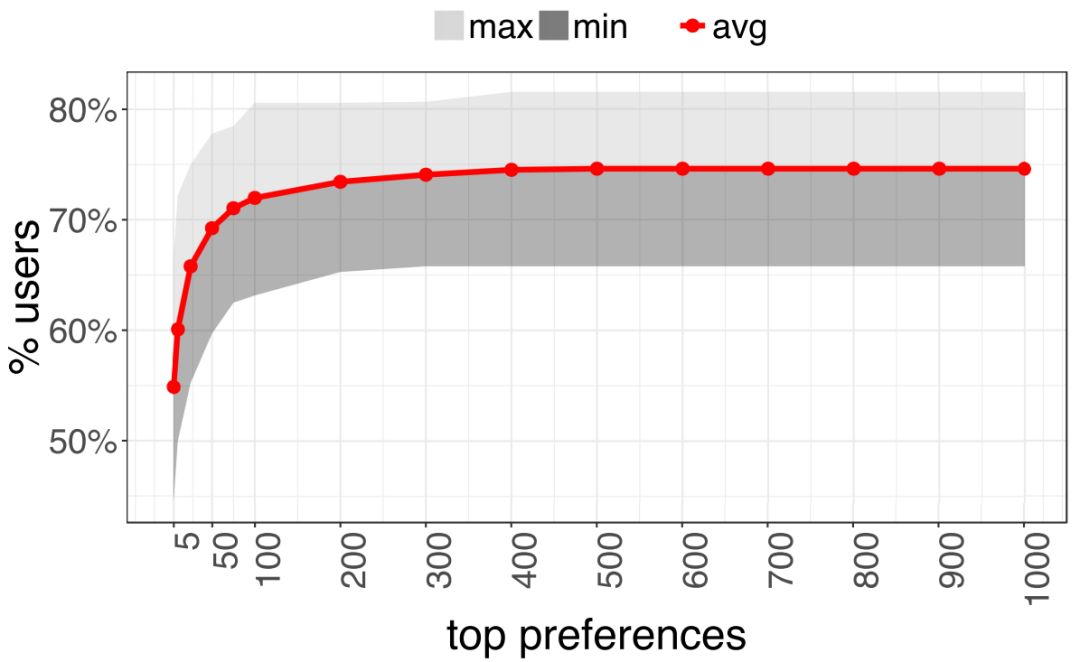

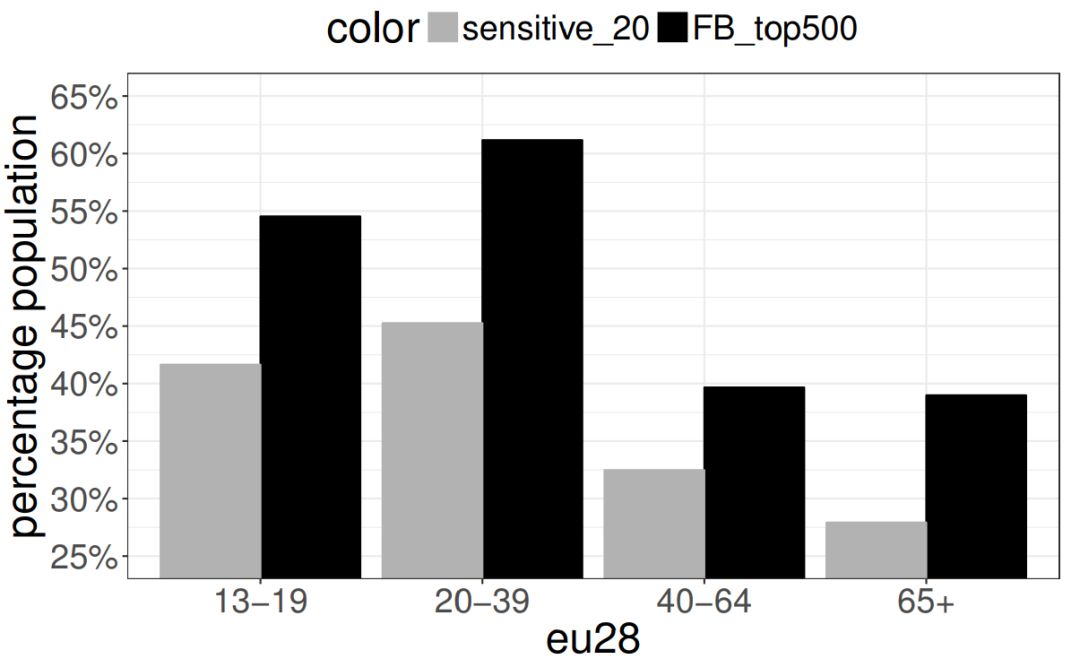

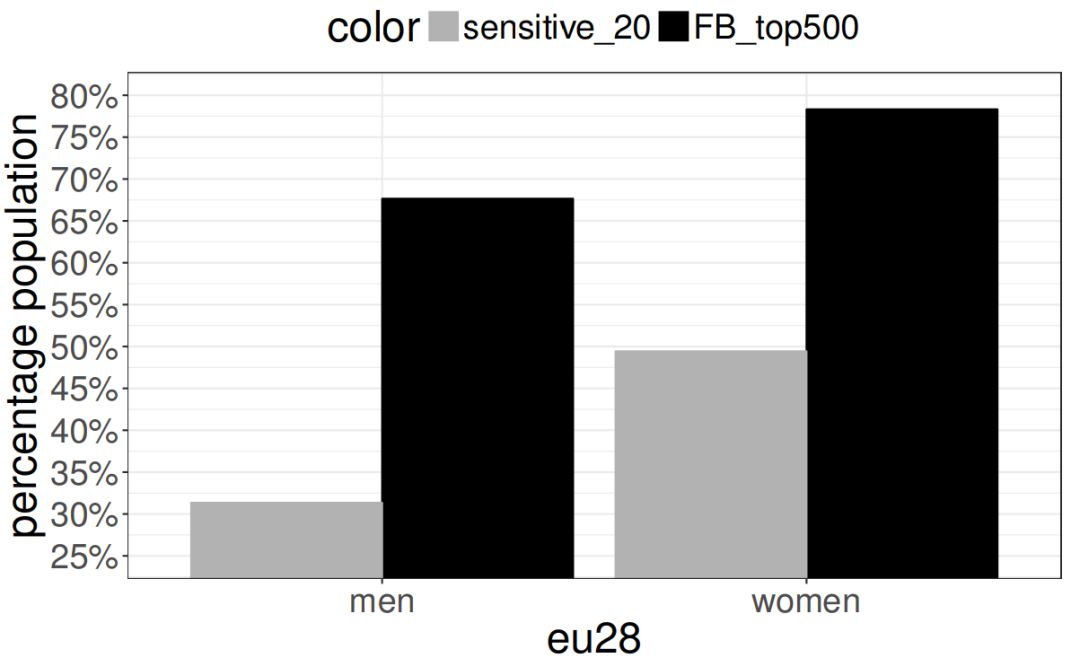

Next, the researchers carried out a demographic analysis of sensitive preferences among Facebook users in the European Union, as well as among EU citizens. In Figure 2, the 60% mark on the vertical axis corresponds to 10 on the horizontal axis. This means that, of all Facebook users in the EU, 60% have been tagged by Facebook with at least one of the top 10 sensitive ad preferences. Table 2 gives more detailed statistics: the percentage of FB users tagged with the top 500 preferences. Comparing countries, the lowest share of FB users is in France (65.79%) and the highest is in Portugal (81.54%); in the other countries, the share of FB users holding the top 500 preferences lies between 65.79% and 81.54%. In short, in every EU country, two thirds or more of Facebook users have been tagged by Facebook with sensitive ad preferences.

To demonstrate the dangers of sensitive preferences, the researchers selected 20 extremely sensitive preferences (illegal immigration, suicide, nationalism, women's rights, etc.) from the full set for the subsequent statistics. They then analyzed sensitive preferences by age and gender. Users were divided into 4 age groups: teens (13-19), young adults (20-39), middle-aged adults (40-64), and older adults (65+). The results are shown in Figure 3(a). They show that, compared with the other groups, Facebook's sensitive preferences "favor" teens (13-19) and young adults (20-39). The gender statistics in Figure 3(b) show a similar gap between men and women: women are more likely than men to be labeled with sensitive preferences by Facebook.

Figure 3(a): Percentage of users with sensitive preferences, by age group

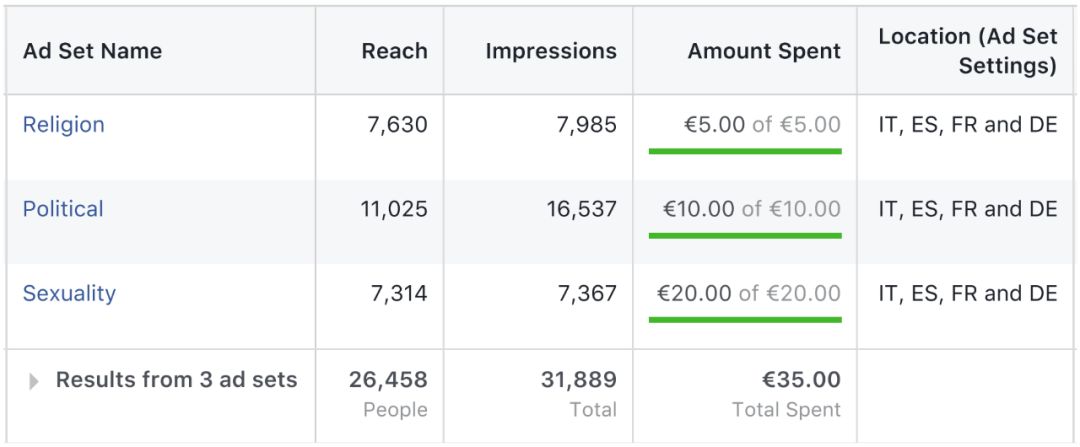

Finally, the researchers used Facebook's ad management system themselves to run a real advertising campaign targeting sensitive preferences. They set up three groups of ad experiments by target preference: religion (Islam, Christianity, Judaism, or Buddhism), politics (anarchism, radical feminism, etc.), and sexual orientation (bisexual and gay). The target regions were Germany, France, Italy, and Spain. After launching the campaigns, the researchers waited several days and received a bill for 35 euros, along with 26,458 target users reached. This is hard evidence: the success of a real ad experiment on sensitive information shows that, at least before the GDPR took effect in May 2018, Facebook really did use users' sensitive information for commercial purposes. Based on the statistical analysis above, Facebook's privacy violations affect 73% of its users in EU countries.

Using sensitive user preferences for commercial advertising can do great social harm. For example, a Nazi extremist group could use FB ad management to find sympathizers through advertising and so expand its organization. Likewise, online fraudsters could target users' sensitive preferences and post phishing ads through FB. Related research shows that 9% of users fall for online fraud. A simple calculation of the cost of fraud shows that a criminal needs only 0.0147 euros to carry out one successful online fraud, which is on the order of 0.1 RMB. Fraud through sensitive preferences is therefore very cheap.
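The text does not spell out how the 0.0147 euro figure is derived. One reading that reproduces it from the numbers quoted above is to divide the campaign cost by the number of users reached, and then by the 9% success rate; the variable names below are illustrative.

```python
# Figures quoted in the text above.
campaign_cost_eur = 35.0      # bill for the ad experiment
users_reached = 26458         # target users reached
phishing_success_rate = 0.09  # share of users who fall for online fraud

# Cost of reaching one user, then cost of one successful fraud.
cost_per_user = campaign_cost_eur / users_reached
cost_per_success = cost_per_user / phishing_success_rate

print(f"{cost_per_user:.6f}")     # 0.001323
print(f"{cost_per_success:.4f}")  # 0.0147
```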

The enforcement of the GDPR has, to some extent, curbed large companies' wanton abuse of users' sensitive private data, but it cannot eradicate the problem completely. On July 12 of this year, Facebook was fined 5 billion US dollars by the US Federal Trade Commission for mishandling users' personal information. Clearly, protecting user privacy around the world still has a long way to go.

To better protect user privacy, the following two measures could be considered:

(1) Design and develop a browser plug-in that automatically alters the preferences generated by a user's web browsing, based on their semantics, for example by generating preferences with the opposite meaning alongside the original ones, or by replacing specific preferences with coarse-grained ones;

(2) When an ad is published, the website's ad management system could apply a privacy protection method (such as differential privacy) to perturb, in a quantified way, the target user preferences of the ads that advertisers post, in order to protect user privacy.
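As one possible reading of measure (2), the sketch below applies randomized response, a basic local differential privacy mechanism, to a single yes/no preference flag. It is an illustrative assumption rather than a method proposed in the cited work; `epsilon`, the function names, and the sample data are hypothetical.

```python
import math
import random

def truth_probability(epsilon):
    """Probability of reporting the true flag; gives epsilon-differential privacy."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))

def randomized_response(has_preference, epsilon, rng):
    """Report the true flag with probability p, otherwise report its opposite."""
    if rng.random() < truth_probability(epsilon):
        return has_preference
    return not has_preference

def estimate_true_rate(reported, epsilon):
    """Unbiased estimate of the real share of users holding the preference (epsilon > 0)."""
    p = truth_probability(epsilon)
    observed = sum(reported) / len(reported)
    # observed = rate * p + (1 - rate) * (1 - p), solved for rate.
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)

rng = random.Random(42)  # fixed seed so the run is repeatable
true_flags = [True] * 300 + [False] * 700  # toy population, 30% hold the preference
reported = [randomized_response(flag, epsilon=1.0, rng=rng) for flag in true_flags]

print(f"estimated rate: {estimate_true_rate(reported, epsilon=1.0):.3f}")
```

A smaller `epsilon` flips more flags and gives stronger privacy, at the cost of a noisier aggregate estimate for the advertiser.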

Bibliography:

[1] J. G. Cabañas, Á. Cuevas, and R. Cuevas, "Unveiling and Quantifying Facebook Exploitation of Sensitive Personal Data for Advertising Purposes," in 27th USENIX Security Symposium (USENIX Security 18), pp. 479-495, Baltimore, MD, USA, Aug. 15-17, 2018.

[2] G. Venkatadri, A. Andreou, Y. Liu, A. Mislove, K. P. Gummadi, P. Loiseau, and O. Goga, "Privacy Risks with Facebook's PII-based Targeting: Auditing a Data Broker's Advertising Interface," in 39th IEEE Symposium on Security and Privacy (S&P'18), pp. 89-107, San Francisco, CA, USA, May 21-23, 2018.

About the author: Wang Mengyuan is a master's student in the Mobile Computing and Network Big Data Laboratory at Hunan University, mainly interested in mobile privacy protection.

For more research updates from the NEST lab, visit our website: www.nest-lab.com
