TED Talk: Who pays the price when human prejudice is built into artificial intelligence?

Today, artificial intelligence has become an integral part of human life, but what happens when human bias is built into these machines? Technology expert Kriti Sharma explores how a lack of diversity in the tech industry can affect artificial intelligence, and she proposes three solutions.

Full transcript (from TED's official website)

How many decisions have been made about you today, or this week or this year, by artificial intelligence? I build AI for a living so, full disclosure, I'm kind of a nerd. And because I'm kind of a nerd, whenever some new news story comes out about artificial intelligence stealing all our jobs, or robots getting citizenship of an actual country, I'm the person my friends and followers message freaking out about the future.

We see this everywhere. This media panic that our robot overlords are taking over. We could blame Hollywood for that. But in reality, that's not the problem we should be focusing on. There is a more pressing danger, a bigger risk with AI, that we need to fix first. So we are back to this question: How many decisions have been made about you today by AI? And how many of these were based on your gender, your race or your background?

Algorithms are being used all the time to make decisions about who we are and what we want. Some of the women in this room will know what I'm talking about if you've been made to sit through those pregnancy test adverts on YouTube like 1,000 times. Or you've scrolled past adverts of fertility clinics on your Facebook feed. Or in my case, Indian marriage bureaus.

But AI isn't just being used to make decisions about what products we want to buy or which show we want to binge watch next. I wonder how you'd feel about someone who thought things like this: "A black or Latino person is less likely than a white person to pay off their loan on time." "A person called John makes a better programmer than a person called Mary." "A black man is more likely to be a repeat offender than a white man." You're probably thinking, "Wow, that sounds like a pretty sexist, racist person," right? These are some real decisions that AI has made very recently, based on the biases it has learned from us, from the humans. AI is being used to help decide whether or not you get that job interview; how much you pay for your car insurance; how good your credit score is; and even what rating you get in your annual performance review. But these decisions are all being filtered through its assumptions about our identity, our race, our gender, our age. How is that happening?

Now, imagine an AI is helping a hiring manager find the next tech leader in the company. So far, the manager has been hiring mostly men. So the AI learns men are more likely to be programmers than women. And it's a very short leap from there to: men make better programmers than women. We have reinforced our own bias into the AI. And now, it's screening out female candidates. Hang on, if a human hiring manager did that, we'd be outraged, we wouldn't allow it. This kind of gender discrimination is not OK. And yet somehow, AI has become above the law, because a machine made the decision. That's not it.
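
The feedback loop described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not how any real hiring product works: the hiring records are invented, gender is the only feature, and the names `learn_hire_rates` and `screen` are hypothetical. The point is only that a model which learns by counting past outcomes turns a skewed history into a skewed rule.

```python
from collections import Counter

# Hypothetical, invented hiring history: (gender, was_hired).
# It is skewed toward hiring men, as in the talk's scenario.
history = (
    [("male", True)] * 45 + [("male", False)] * 55
    + [("female", True)] * 5 + [("female", False)] * 45
)


def learn_hire_rates(records):
    """'Train' by counting: estimate P(hired | gender) from past records."""
    totals = Counter(gender for gender, _ in records)
    hires = Counter(gender for gender, hired in records if hired)
    return {gender: hires[gender] / totals[gender] for gender in totals}


def screen(candidates, rates, threshold=0.3):
    """Keep candidates whose group's learned hire rate meets the threshold.

    Gender is the only input, so the screener simply replays the historical skew.
    """
    return [name for name, gender in candidates if rates.get(gender, 0.0) >= threshold]


rates = learn_hire_rates(history)
print(rates)  # {'male': 0.45, 'female': 0.1}
print(screen([("John", "male"), ("Mary", "female")], rates))  # ['John']
```

Nothing in this sketch measures programming ability; Mary is screened out only because past hiring favored men, which is exactly the reinforcement the talk warns about.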

We are also reinforcing our bias in how we interact with AI. How often do you use a voice assistant like Siri, Alexa or even Cortana? They all have two things in common: one, they can never get my name right, and second, they are all female. They are designed to be our obedient servants, turning your lights on and off, ordering your shopping. You get male AIs too, but they tend to be more high-powered, like IBM Watson, making business decisions, Salesforce Einstein or ROSS, the robot lawyer. So poor robots, even they suffer from sexism in the workplace.

Think about how these two things combine and affect a kid growing up in today's world around AI. So they're doing some research for a school project and they Google images of CEO. The algorithm shows them results of mostly men. And now, they Google personal assistant. As you can guess, it shows them mostly females. And then they want to put on some music, and maybe order some food, and now, they are barking orders at an obedient female voice assistant. Some of our brightest minds are creating this technology today. Technology that they could have created in any way they wanted. And yet, they have chosen to create it in the style of a 1950s "Mad Men" secretary. Yay!

But OK, don't worry, this is not going to end with me telling you that we are all heading towards sexist, racist machines running the world. The good news about AI is that it is entirely within our control. We get to teach the right values, the right ethics to AI. So there are three things we can do. One, we can be aware of our own biases and the bias in machines around us. Two, we can make sure that diverse teams are building this technology. And three, we have to give it diverse experiences to learn from. I can talk about the first two from personal experience. When you work in technology and you don't look like a Mark Zuckerberg or Elon Musk, your life is a little bit difficult, your ability gets questioned.
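
One concrete way to act on the first point, being aware of the bias in the machines around us, is to audit a system's decisions by group. The sketch below is an assumption, not a method from the talk: it computes each group's selection rate and the ratio of the lowest rate to the highest, then flags the result against the "four-fifths rule", a screening heuristic from the US EEOC Uniform Guidelines on Employee Selection Procedures. The decision data and all function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected). Returns the selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def flag_adverse_impact(decisions, threshold=0.8):
    """Return (ratio, flagged); flagged is True when the ratio falls below the
    four-fifths (0.8) heuristic."""
    ratio = disparate_impact_ratio(selection_rates(decisions))
    return ratio, ratio < threshold


# Hypothetical screener output: 30 of 50 men and 12 of 40 women selected.
decisions = (
    [("men", True)] * 30 + [("men", False)] * 20
    + [("women", True)] * 12 + [("women", False)] * 28
)
ratio, flagged = flag_adverse_impact(decisions)
print(f"{ratio:.2f}", flagged)  # 0.50 True
```

A ratio below 0.8 is a signal to investigate rather than proof of discrimination; a fuller audit would also consider sample sizes and which inputs drive the decisions.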

Here's just one example. Like most developers, I often join online tech forums and share my knowledge to help others. And I've found, when I log on as myself, with my own photo, my own name, I tend to get questions or comments like this: "What makes you think you're qualified to talk about AI?" "What makes you think you know about machine learning?" So, as you do, I made a new profile, and this time, instead of my own picture, I chose a cat with a jet pack on it. And I chose a name that did not reveal my gender. You can probably guess where this is going, right? So, this time, I didn't get any of those patronizing comments about my ability and I was able to actually get some work done. And it sucks, guys. I've been building robots since I was 15, I have a few degrees in computer science, and yet, I had to hide my gender in order for my work to be taken seriously.

So, what's going on here? Are men just better at technology than women? Another study found that when women coders on one platform hid their gender, like myself, their code was accepted four percent more often than men's. So this is not about the talent. This is about an elitism in AI that says a programmer needs to look like a certain person. What we really need to do to make AI better is bring people from all kinds of backgrounds. We need people who can write and tell stories to help us create personalities of AI. We need people who can solve problems. We need people who face different challenges and we need people who can tell us what the real issues that need fixing are and help us find ways that technology can actually fix them. Because, when people from diverse backgrounds come together, when we build things in the right way, the possibilities are limitless.

And that's what I want to end by talking to you about. Less about racist robots, less about machines that are going to take our jobs, and more about what technology can actually achieve. So, yes, some of the energy in the world of AI, in the world of technology is going to be about what ads you see on your stream. But a lot of it is going towards making the world so much better. Think about a pregnant woman in the Democratic Republic of Congo, who has to walk 17 hours to her nearest rural prenatal clinic to get a checkup. What if she could get a diagnosis on her phone, instead? Or think about what AI could do for those one in three women in South Africa who face domestic violence. If it wasn't safe to talk out loud, they could get an AI service to raise the alarm and get financial and legal advice. These are all real examples of projects that people, including myself, are working on right now, using AI.

So, I'm sure in the next couple of days there will be yet another news story about the existential risk, robots taking over and coming for your jobs.

And when something like that happens, I know I'll get the same messages worrying about the future. But I feel incredibly positive about this technology. This is our chance to remake the world into a much more equal place. But to do that, we need to build it the right way from the get go. We need people of different genders, races, sexualities and backgrounds. We need women to be the makers and not just the machines who do the makers' bidding. We need to think very carefully about what we teach machines, what data we give them, so they don't just repeat our own past mistakes. So I hope I leave you thinking about two things. First, I hope you leave thinking about bias today. And that the next time you scroll past an advert that assumes you are interested in fertility clinics or online betting websites, that you think and remember that the same technology is assuming that a black man will reoffend. Or that a woman is more likely to be a personal assistant than a CEO. And I hope that reminds you that we need to do something about it.

And second, I hope you think about the fact that you don't need to look a certain way or have a certain background in engineering or technology to create AI, which is going to be a phenomenal force for our future. You don't need to look like a Mark Zuckerberg, you can look like me. And it is up to all of us in this room to convince the governments and the corporations to build AI technology for everyone, including the edge cases. And for us all to get education about this phenomenal technology in the future. Because if we do that, then we've only just scratched the surface of what we can achieve with AI.

Thank you.

Thank you.

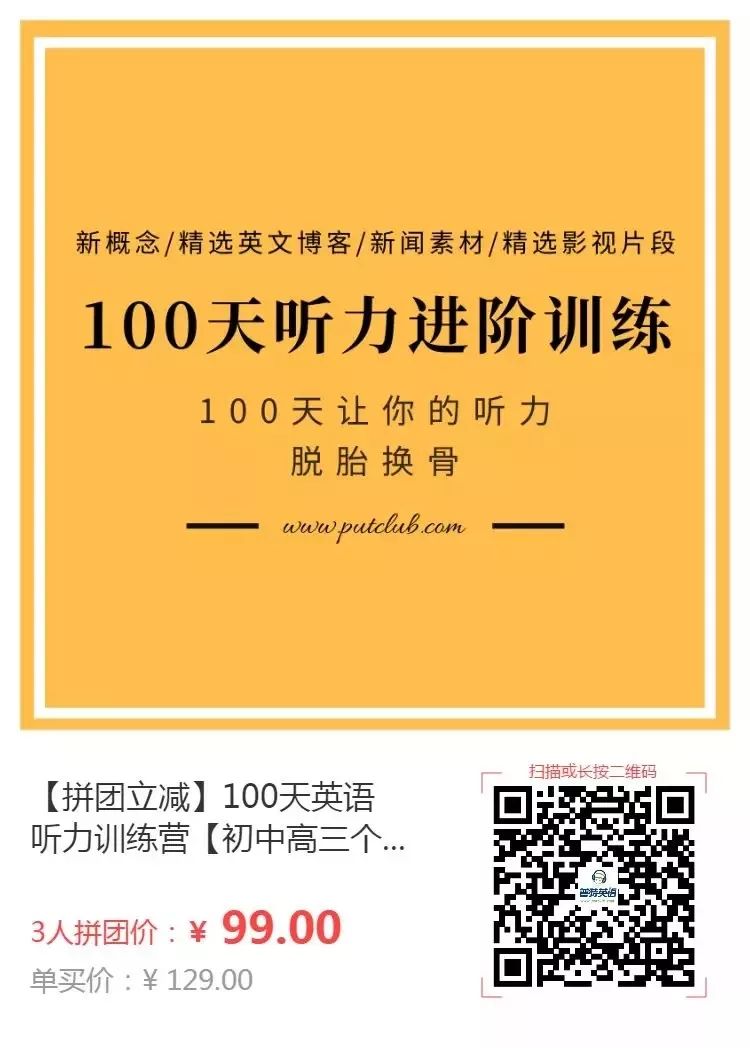

100-day listening boot camp

100-day listening boot camp

2.to improve listening ability,There will be no more problems of "understanding but not understanding", master the practice of listening methods, gradually be able to understand VOA BBC News, watch movies without subtitles

3.Accumulated listening21000word, the equivalent of silently writing a book, The Little Prince.

4.Be familiar with a wide variety of listening materials and have the ability to find the right content for your own learning in a dazzling market;

5.For EnglishThe phenomenon of even skim of pronunciationHave a deep understanding, in the future study, have the ability to find out why they do not understand, and can solve and analyze the problem on their own

6.Greatly.Improve test scores

Go to "Discovery" - "Take a look" browse "Friends are watching"